Advanced AI Solutions for Manufacturing - Industry 4.0 for Every Environment

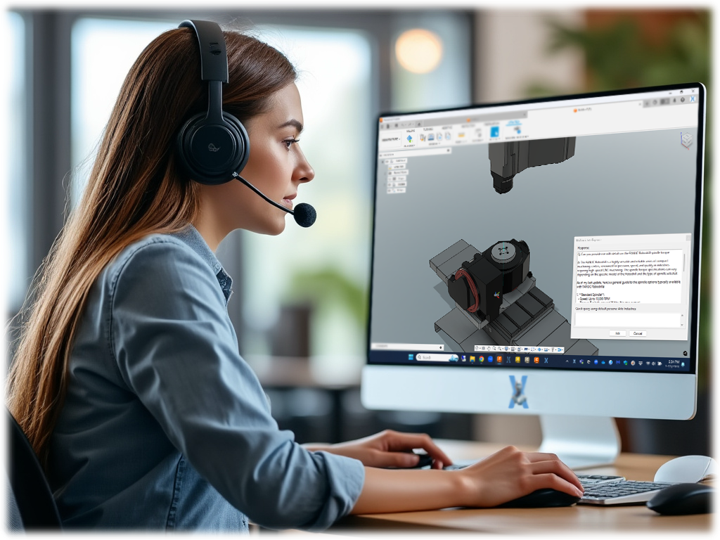

"Two complementary applications from Multiaxis Intelligence serve both cloud-enabled shops and security-conscious manufacturers operating air-gapped systems. Together, they deliver a personalized AI assistant and a zero-trust offline data retrieval system, combining advanced AI with mission-critical protection without disrupting operations."